🎊 Welcome to my website!

My name is Kai Li (Chinese name: 李凯). I’m a second-year PhD student in the Department of Computer Science and Technology, Tsinghua University, supervised by Prof. Xiaolin Hu (胡晓林). I am also a member of the TSAIL Group directed by Prof. Bo Zhang (张钹) and Prof. Jun Zhu (朱军).

🤗 I open-source my work whenever I can.

🤗 My research interests include Speech/Music Separation, Multi-Modal Speech Separation, and Audio LLMs. I have published papers at top conferences and journals such as NeurIPS, ICLR, TPAMI, ICASSP, and Interspeech. If you want to collaborate, feel free to email me.

🔥 News

- 2025.12: 🎉 Three papers (one Oral) are accepted by AAAI 2026.

- 2025.10: 🎲 One paper is accepted by TGRS 2025.

- 2025.10: 🎲 One paper is accepted by TDSC 2025.

- 2025.09: 🎲 One paper is accepted by NeurIPS 2025.

- 2025.08: 🎉 Won the third prize 🥉 in the CCF Advanced Audio Technology Competition Task 2.

- 2025.08: 🎲 One paper is accepted by ASRU 2025.

- 2025.07: 🎲 One paper is accepted by ECAI 2025.

- 2025.06: 🎲 One paper is accepted by ICCV 2025.

- 2025.04: 🧩 Two papers are accepted by ICME 2025.

- 2025.01: 🧩 Two papers are accepted by ICLR 2025.

- 2024.12: 🎲 One paper is accepted by ICASSP 2025.

- 2024.12: 🎉 Outstanding Master's Thesis Award from China Society of Image and Graphics.

- 2024.09: 🎲 One paper is accepted by CCS 2024.

- 2024.08: 🎉 Best Student Presentation Award at NCMMSC 2024.

- 2024.08: 🎲 One paper is accepted by Remote Sensing 2024.

- 2024.06: 🎉 I received the Excellent Master's Thesis Award of Tsinghua University.

- 2024.06: 🎉 I was named an Outstanding Graduate of Beijing.

- 2024.05: 🧩 One paper is accepted by ICML 2024.

- 2024.04: 🎲 One paper is accepted by ISMIR 2024.

- 2024.04: 🎲 One paper is accepted by ICME 2024.

- 2024.03: 🎲 One paper is accepted by TPAMI 2024.

- 2024.01: 🎲 One paper is accepted by ICLR 2024.

- 2024.01: 🎲 One paper is accepted by TGRS 2024.

- 2023.12: 🧩 Two papers are accepted by ICASSP 2024.

- 2023.07: 🎲 One paper is accepted by ECAI 2023 (Oral).

- 2023.05: 🧩 Two papers are accepted by Interspeech 2023.

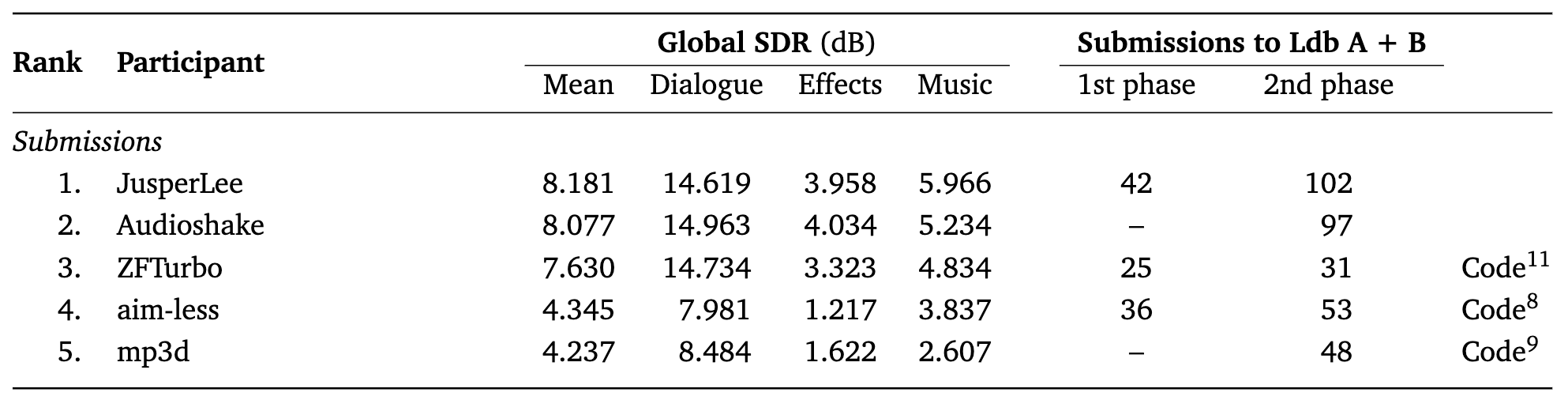

- 2023.05: 🎉 We won first prize 🥇 in the Cinematic Demixing Track of the Sound Demixing Challenge 2023, on both Leaderboard A and Leaderboard B.

- 2023.05: 🎉 We won first prize 🥇 and the Best Application Award at ASC23.

- 2023.02: 🧩 One paper is accepted by ICASSP 2023.

- 2023.01: 🧩 One paper is accepted by ICLR 2023.

- 2022.06: 🧩 One paper is accepted by Neural Computation.

- 2022.06: 🎉 One paper is accepted by Interspeech 2022.

- 2022.06: 🎲 One paper is posted on arXiv.

- 2022.05: 🧩 One paper is submitted to Nature Machine Intelligence.

- 2022.03: 🎉 We won the first prize 🥇 of the Global College Student Supercomputer Challenge (ASC22)

- 2022.03: 🧩 One paper is submitted to IEEE Transactions on Industrial Informatics.

- 2022.03: 🧩🧩 Two papers are submitted to Interspeech 2022.

- 2021.10: 🎉 One paper is accepted by NeurIPS 2021.

- 2021.05: 🎉 We won the 5% of the [Global College Student Supercomputer Challenge (ASC20-21)](http://www.asc-events.net/ASC20-21/Finals.php)

- 2021.01: 🎉 We won the first prize 🥇 of the [Global College Student Supercomputer Challenge (ASC20-21)](http://www.asc-events.net/ASC20-21/Finals.php)

- 2020.06: 🎉 Outstanding Bachelor's Thesis Award, Computer Science and Technology, Qinghai University!

- 2020.06: 🎉 Outstanding Graduate, Computer Science and Technology, Qinghai University!

- 2020.04: 🧩 One paper is accepted by IET Image Processing.

- 2020.01: 🏢 I am an algorithm intern at Moyin Technology.

- 2019.11: 🧩 One paper is accepted by ISPA 2019.

- 2019.11: 🎉 We won first prize 🥇 in the first "Ganqingning" Innovation and Entrepreneurship Competition!

- 2019.11: 🎉 I won the National Scholarship, Ministry of Education, China!

- 2019.05: 🎉 We won second prize 🥈 in the Natural Science Academic Paper category of the Qinghai provincial round of the National College Student Challenge Cup!

- 2019.05: 🎉 We won first prize 🥇 in the Qinghai Division of the 6th National Youth Science Innovation Experiment and Work Competition!

- 2019.05: 🎉 One paper is accepted by ICDIP 2019.

- 2019.04: 🧩 I won second prize 🥈 at the provincial level in the Blue Bridge Cup (Java Group A)!

- 2018.12: 🎉 We won first prize 🥇 in the Natural Science Academic Paper category of the first "Principal's Cup" Innovation and Entrepreneurship Competition in Qinghai Province!

📝 Publications

(* equal contribution, # corresponding author)

2026

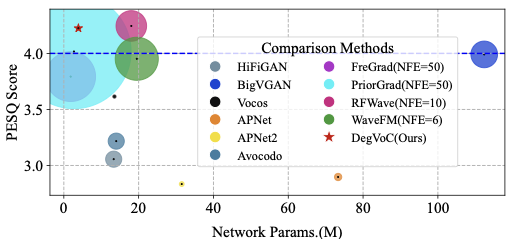

DegVoC: Revisiting Neural Vocoder from a Degradation Perspective. Andong Li, Tong Lei, Lingling Dai, Kai Li, Rilin Chen, Meng Yu, Xiaodong Li, Dong Yu, Chengshi Zheng. AAAI 2026. Singapore, Singapore.

- DegVoC revisits neural vocoders from a degradation perspective and proposes a new approach to high-quality speech synthesis.

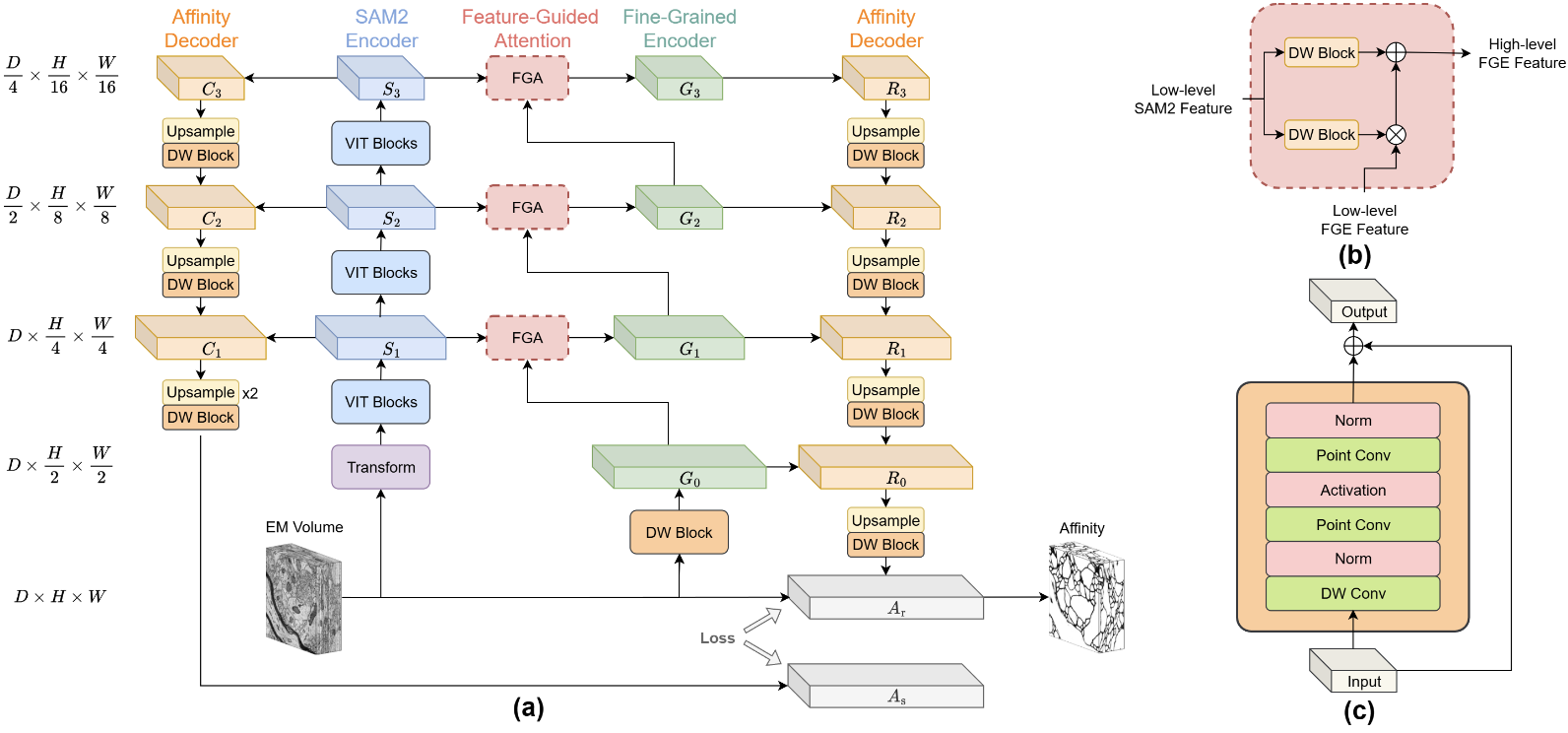

FGNet: Leveraging Feature-Guided Attention to Refine SAM2 for 3D EM Neuron Segmentation. Zhenghua Li, Hang Chen, Zihao Sun, Kai Li, Xiaolin Hu. AAAI 2026. Singapore, Singapore.

- FGNet leverages feature-guided attention to refine SAM2 for accurate 3D electron microscopy neuron segmentation.

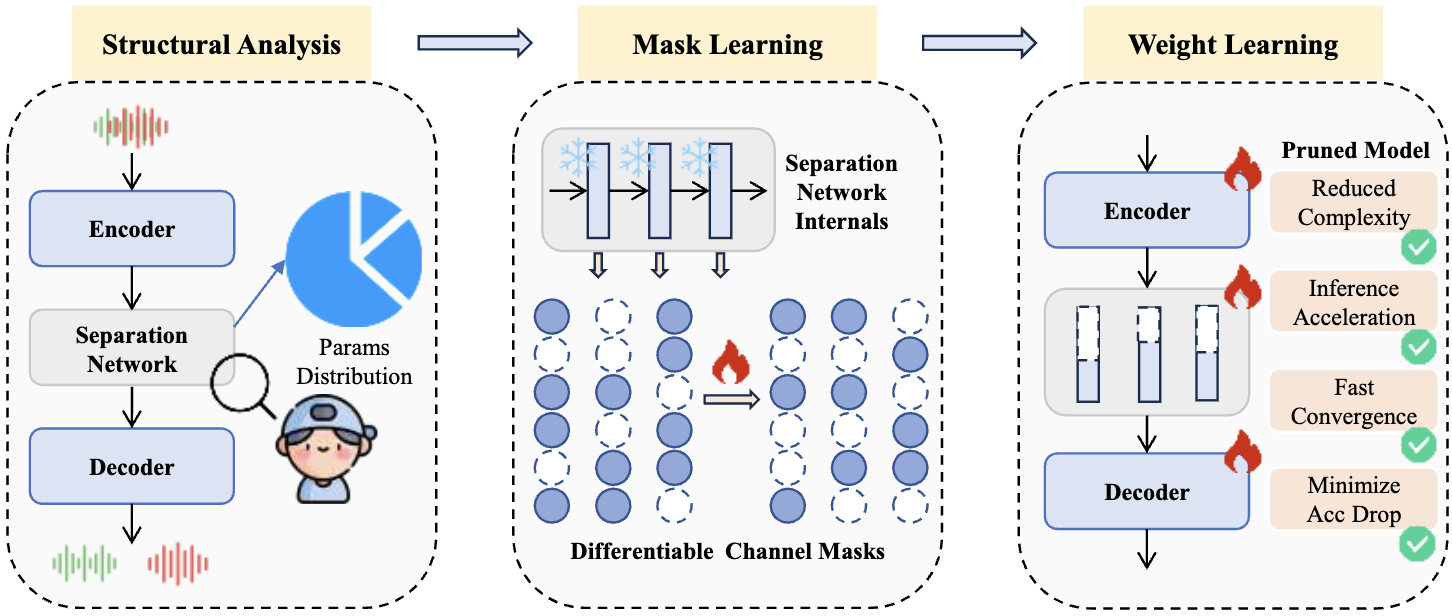

SepPrune: Structured Pruning for Efficient Deep Speech Separation. Yuqi Li*, Kai Li*, Xin Yin, Zhifei Yang, Zeyu Dong, Zhengtao Yao, Haoyan Xu, Yingli Tian, Yao Lu. AAAI 2026. Singapore, Singapore.

2025

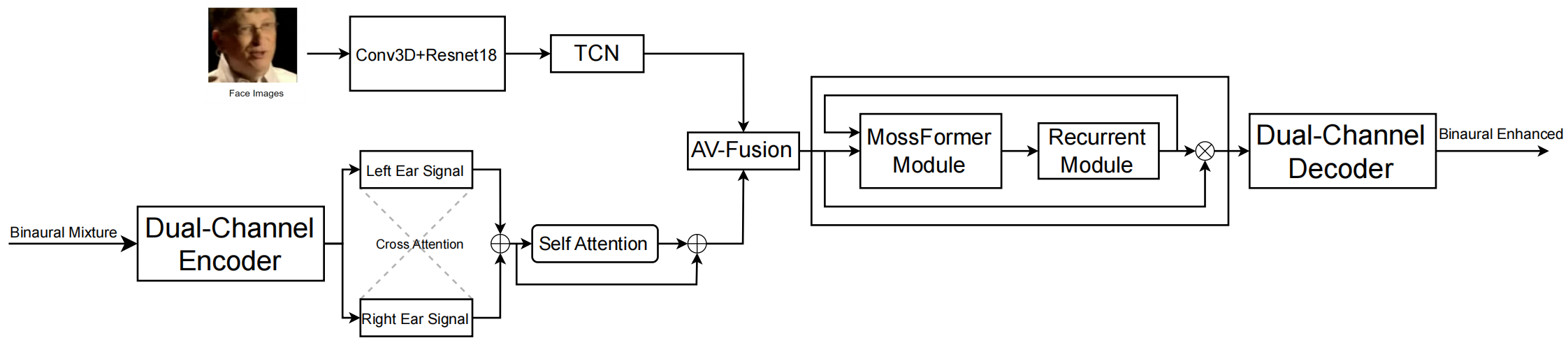

BAV-MossFormer2: Enhanced MossFormer2 for Binaural Audio-Visual Speech Enhancement. Wenze Ren, Kai Li, Rong Chao, Junjie Li, Zilong Huang, Shafique Ahmed, You-Jin Li, Kuo-Hsuan Hung, Syu-Siang Wang, Hsin-Min Wang, Yu Tsao. AVSEC 2025. Rotterdam, Netherlands.

- BAV-MossFormer2 proposes an innovative architecture for binaural audio-visual speech enhancement, featuring an enhanced stereo audio encoder with cross-attention mechanisms for channel interaction and an adaptive dynamic fusion module for context-aware audio-visual feature fusion.

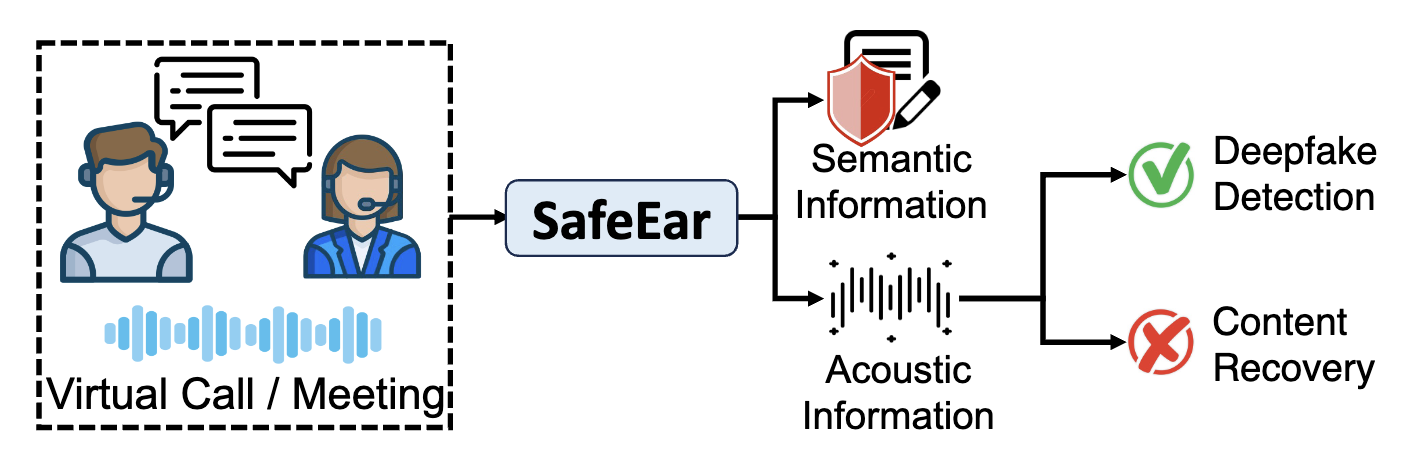

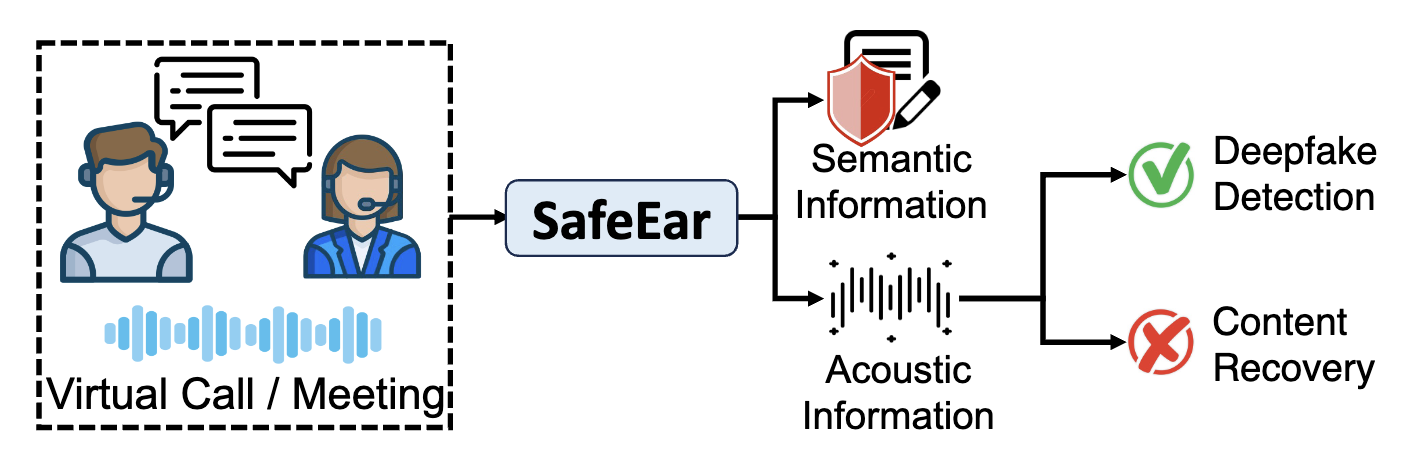

Critical Information Only: A Content Privacy-Preserving Framework for Detecting Audio Deepfakes. Xinfeng Li, Yifan Zheng, Chen Yan, Kai Li, Chang Zeng, Xiaoyu Ji, Wenyuan Xu. IEEE Transactions on Dependable and Secure Computing (TDSC) 2025.

MMAR: A Challenging Benchmark for Deep Reasoning in Speech, Audio, Music, and Their Mix. Ziyang Ma, Yinghao Ma, Yanqiao Zhu, Chen Yang, Yi-Wen Chao, Ruiyang Xu, Wenxi Chen, Yuanzhe Chen, Zhuo Chen, Jian Cong, Kai Li, et al. NeurIPS 2025. San Diego, California.

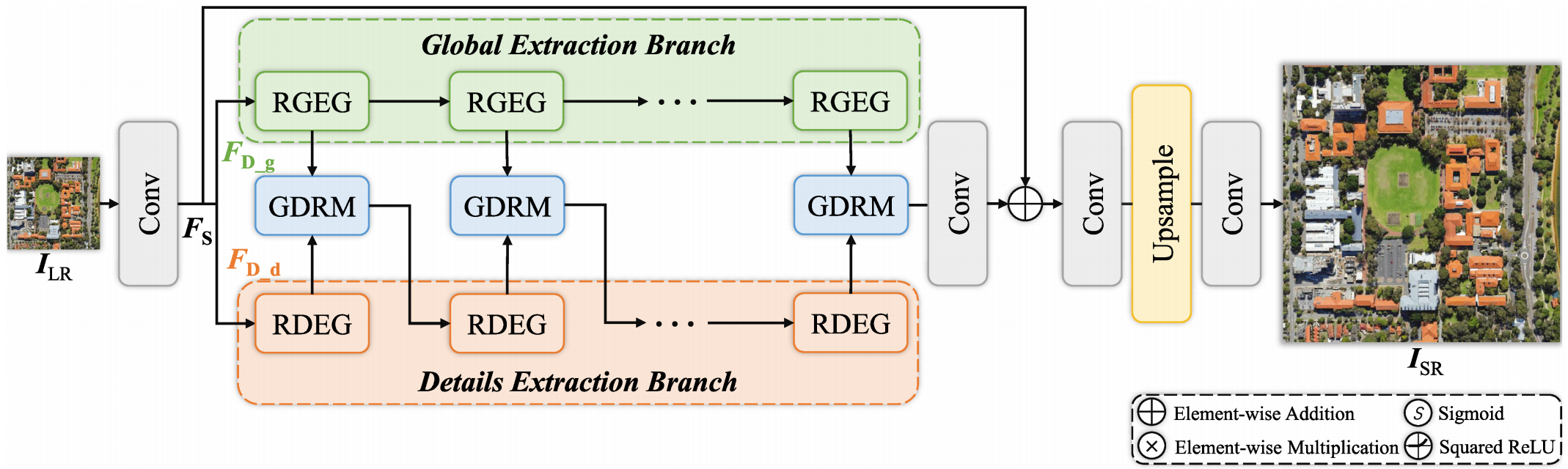

GDSR: Global-Detail Integration Through Dual-Branch Network With Wavelet Losses for Remote Sensing Image Super-Resolution. Qiwei Zhu, Kai Li, Guojing Zhang, Xiaoying Wang, Jianqiang Huang, Xilai Li. IEEE Transactions on Geoscience and Remote Sensing (TGRS) 2025.

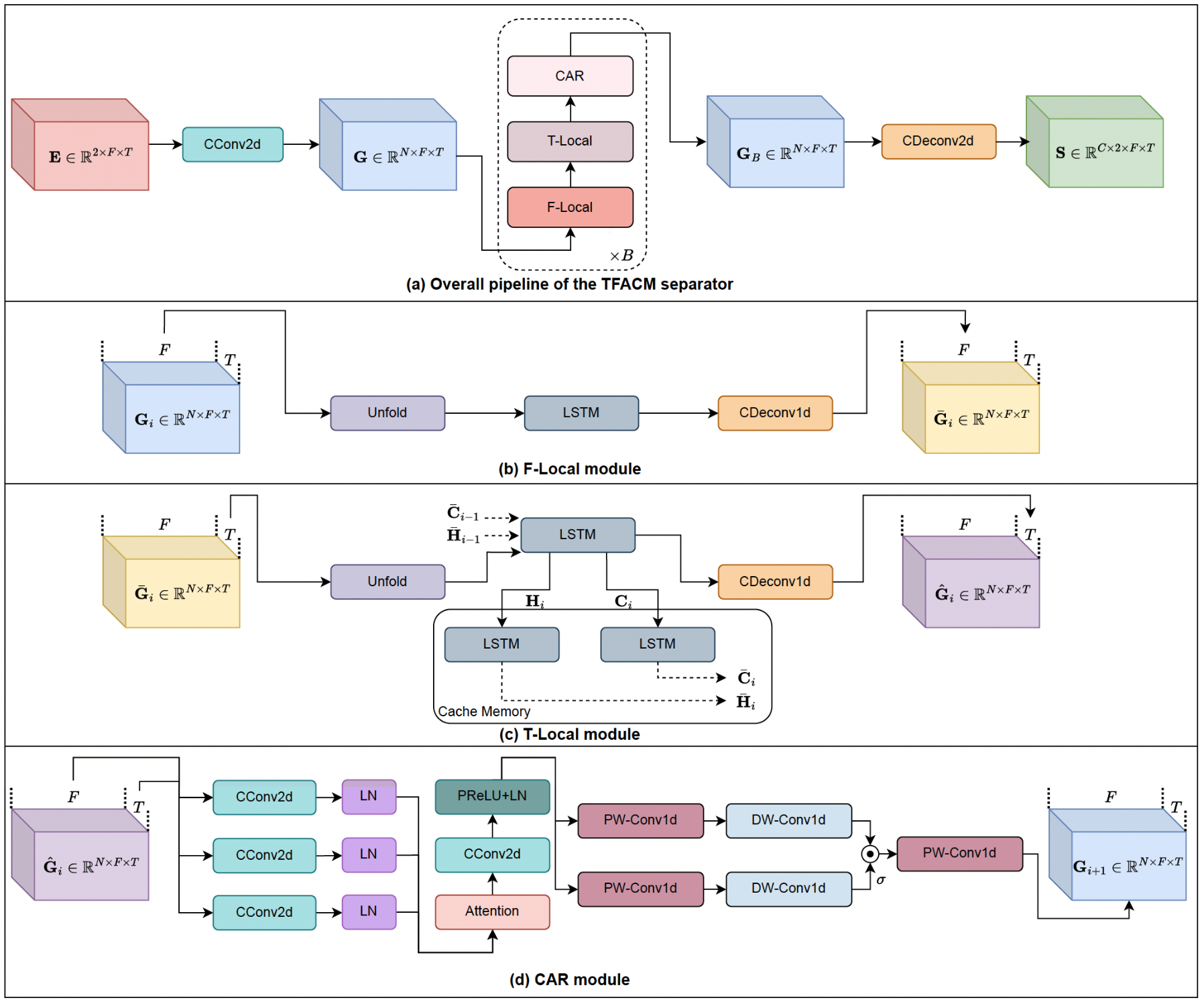

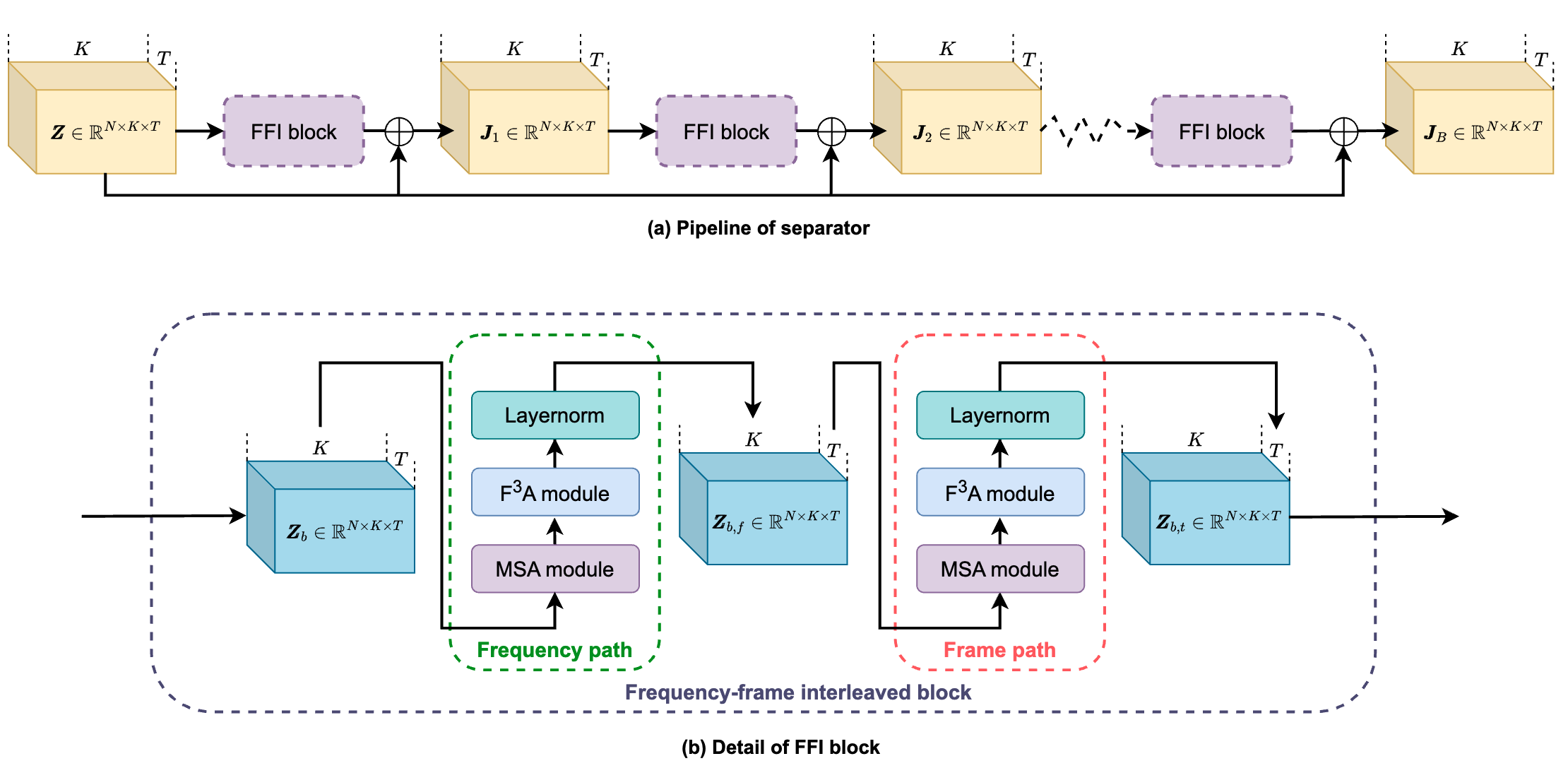

Time-Frequency-Based Attention Cache Memory Model for Real-Time Speech Separation. Guo Chen*, Kai Li*, Runxuan Yang, Xiaolin Hu. ASRU 2025. Honolulu, Hawaii.

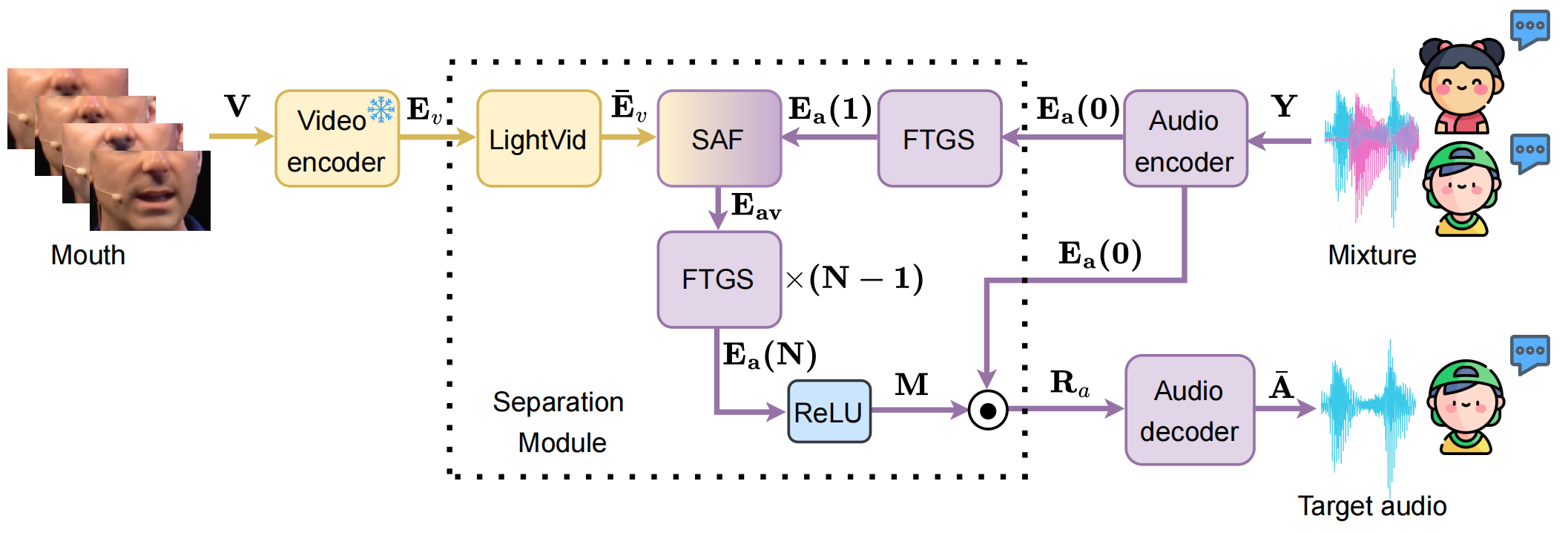

A fast and lightweight model for Causal Audio-Visual Speech Separation. Wendi Sang*, Kai Li*, Runxuan Yang, Jianqiang Huang, Xiaolin Hu. ECAI 2025. Bologna, Italy.

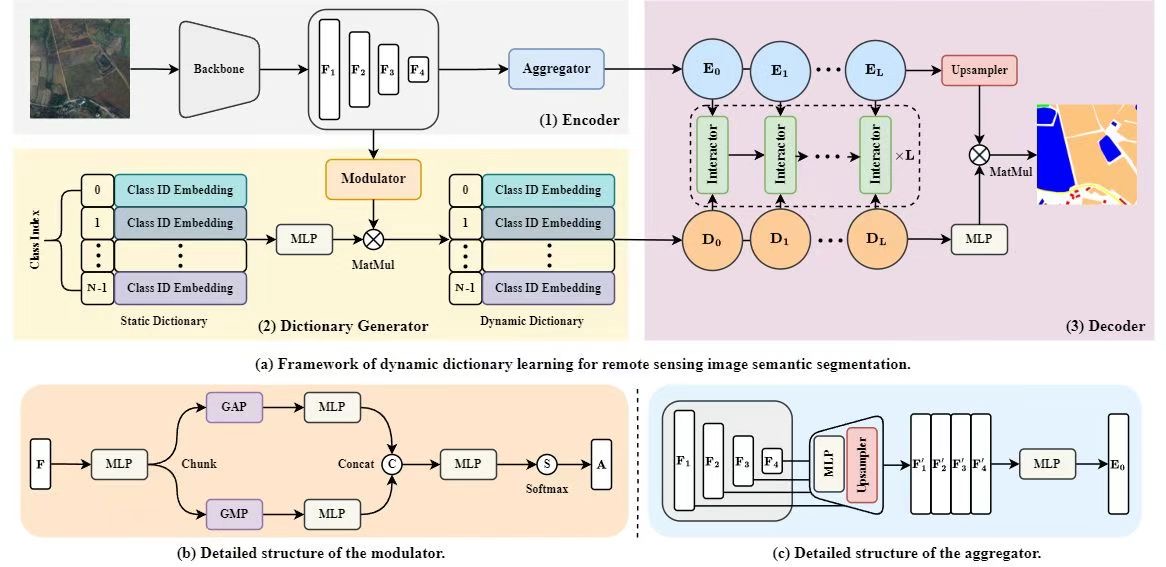

Dynamic Dictionary Learning for Remote Sensing Image Segmentation. Xuechao Zou, Yue Li, Shun Zhang, Kai Li, Shiying Wang, Pin Tao, Junliang Xing, Congyan Lang. ICCV 2025. Honolulu, Hawaii.

- To tackle inter-class similarity, intra-class variability, and limited adaptability to scene changes (e.g., cloud thickness) in remote sensing segmentation, we propose a dynamic dictionary learning framework. It applies multi-stage alternating cross-attention between image features and dictionary embeddings for dynamic refinement, and adds a dictionary-space contrastive constraint that improves intra-class compactness and inter-class separability. The framework outperforms state-of-the-art methods on both coarse- and fine-grained datasets, especially on the LoveDA and UAVid online leaderboards.

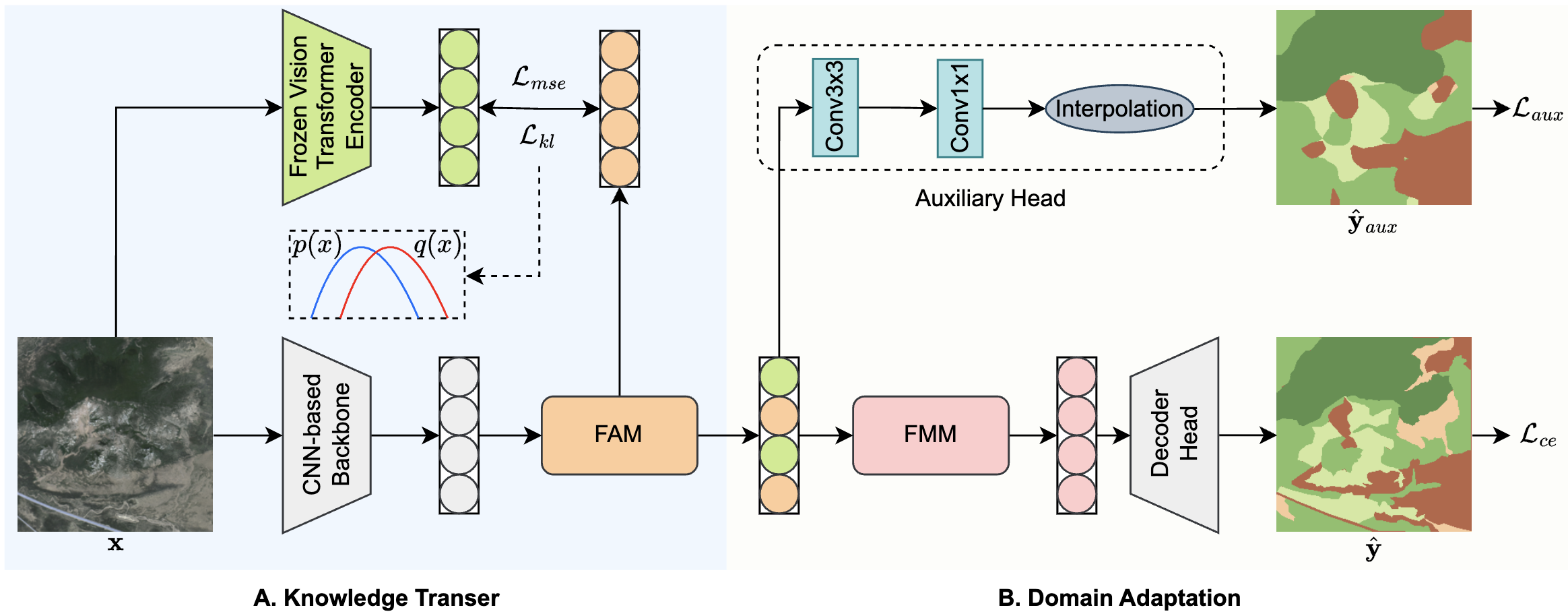

Knowledge Transfer and Domain Adaptation for Fine-Grained Remote Sensing Image Segmentation. Shun Zhang, Xuechao Zou, Kai Li, Congyan Lang, Shiying Wang, Pin Tao, Tengfei Cao. ICME 2025. Nantes, France.

SPMamba: State-space model is all you need in speech separation. Kai Li*, Guo Chen*, Runxuan Yang, Xiaolin Hu. ICME 2025. Nantes, France.

SonicSim: A customizable simulation platform for speech processing in moving sound source scenarios. Kai Li, Wendi Sang, Chang Zeng, Guo Chen, Runxuan Yang, Xiaolin Hu. ICLR 2025. Singapore EXPO.

- SonicSim is a customizable simulation platform built on Habitat-sim, designed to generate high-fidelity, diverse synthetic data for speech separation and enhancement tasks involving moving sound sources, addressing the limitations of real-world and existing synthetic datasets in acoustic realism and scalability.

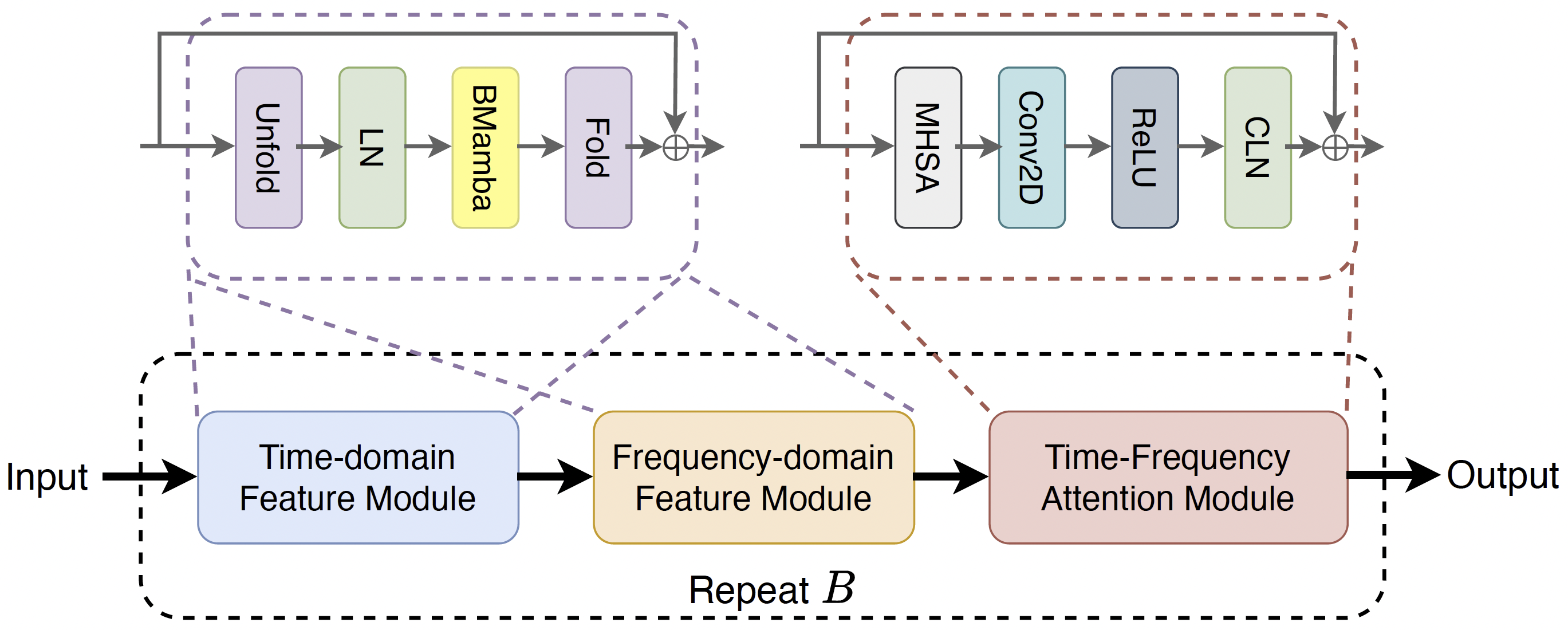

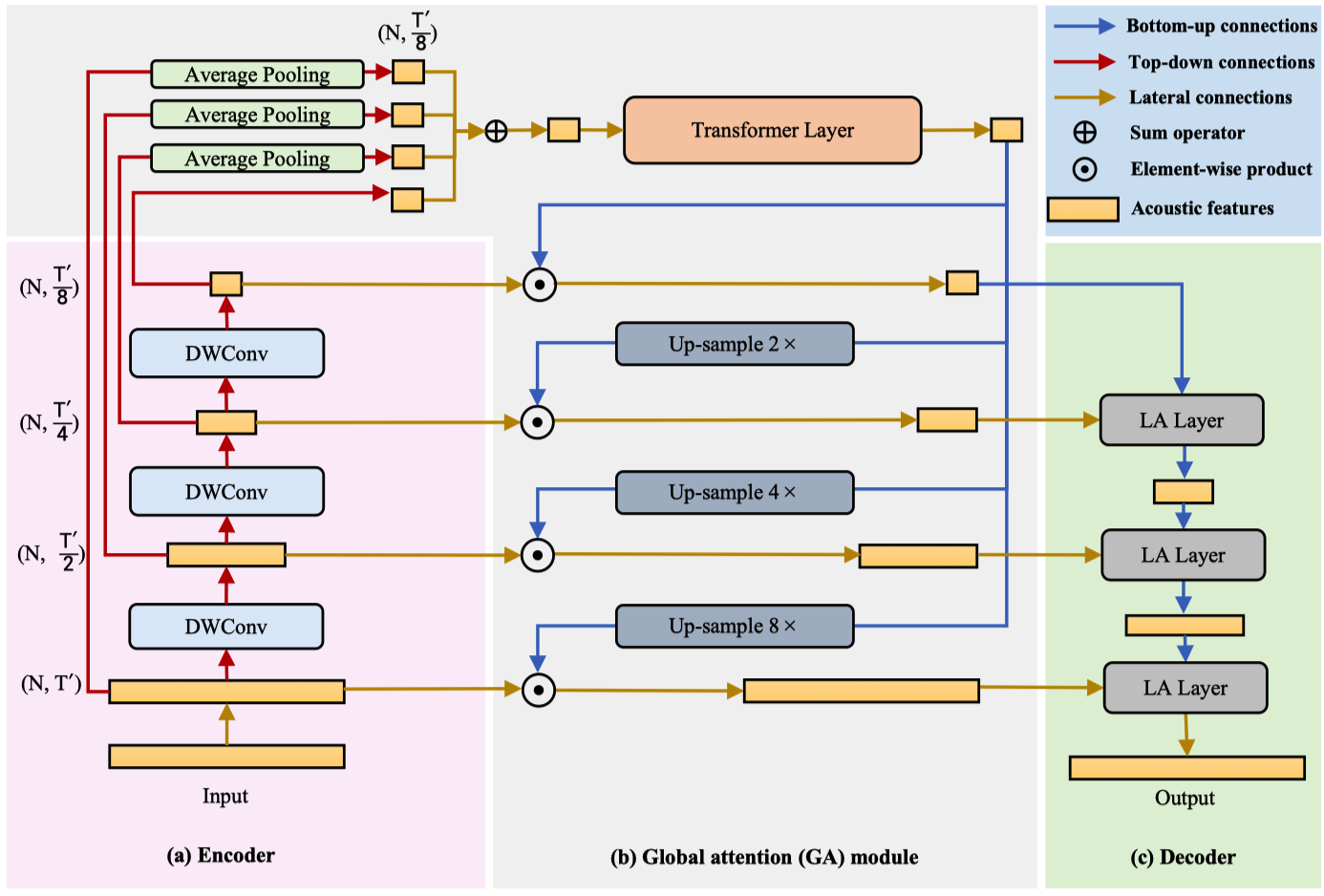

TIGER: Time-frequency Interleaved Gain Extraction and Reconstruction for Efficient Speech Separation. Mohan Xu, Kai Li*, Guo Chen, Xiaolin Hu. ICLR 2025. Singapore EXPO.

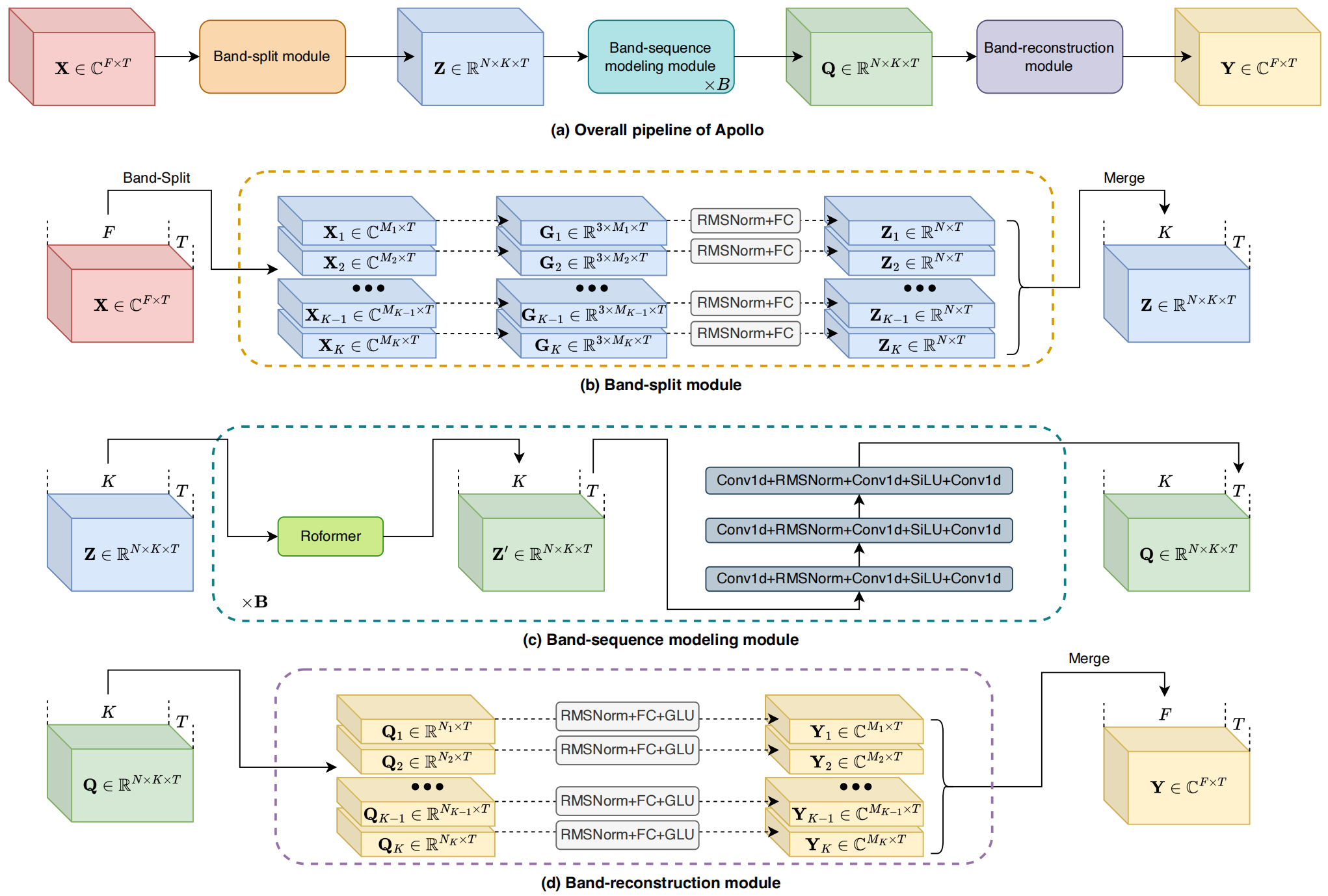

Apollo: Band-sequence Modeling for High-Quality Audio Restoration. Kai Li, Yi Luo. ICASSP 2025. Hyderabad, India.

2024

SafeEar: Content Privacy-Preserving Audio Deepfake Detection. Xinfeng Li, Kai Li*, Yifan Zheng, Chen Yan, Xiaoyu Ji, Wenyuan Xu. CCS 2024. Salt Lake City, U.S.A.

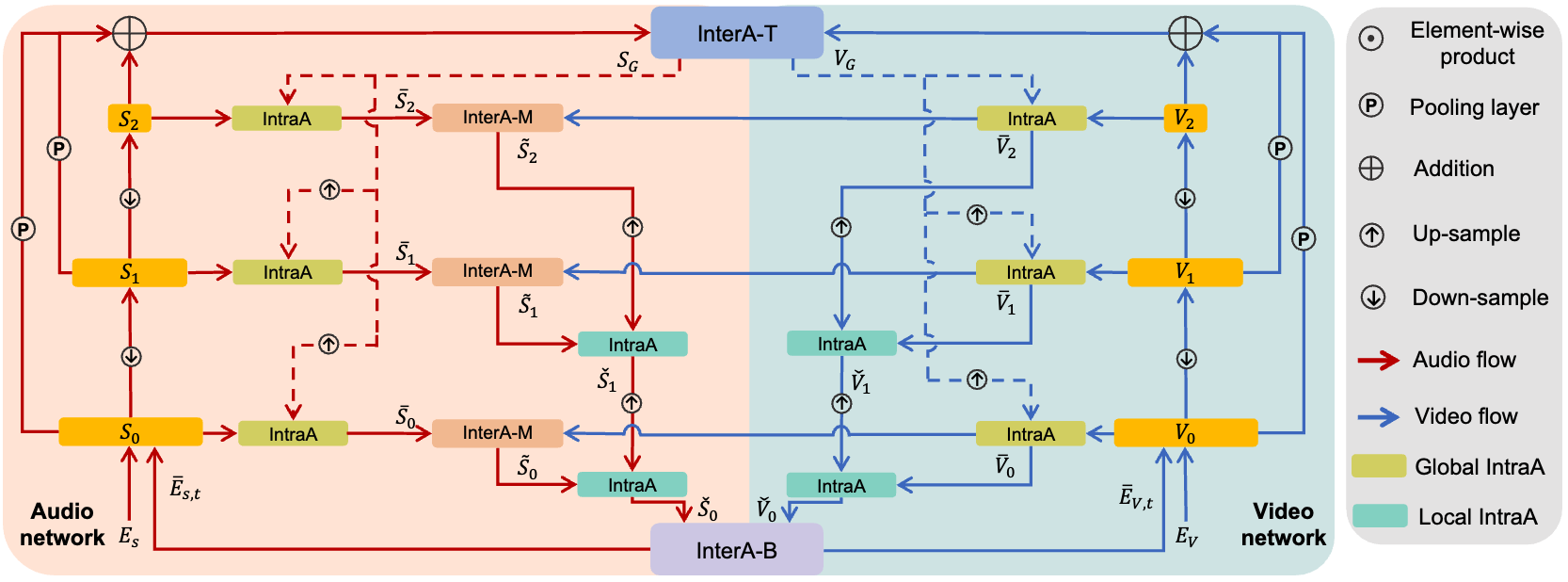

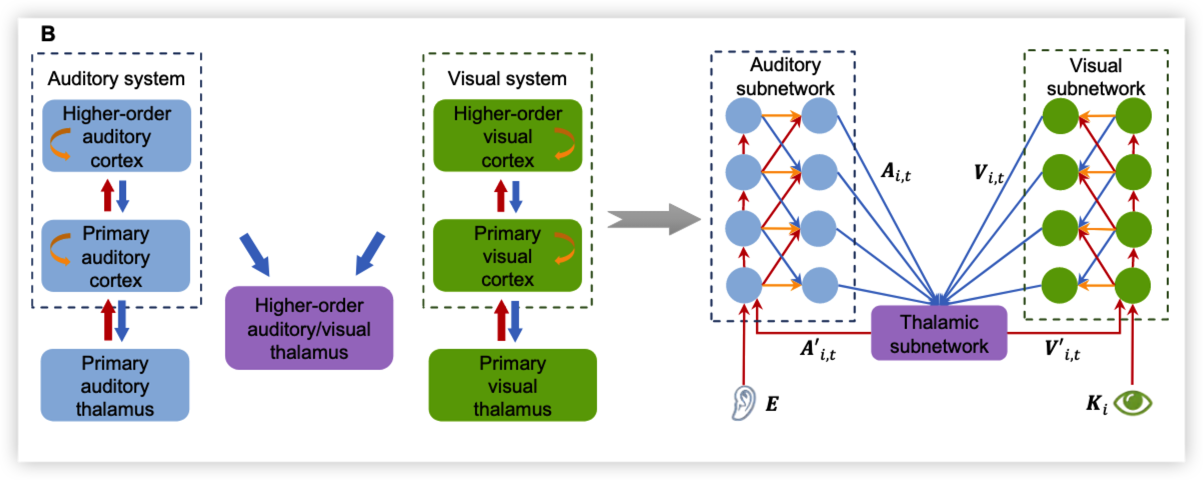

IIANet: an intra- and inter-modality attention network for audio-visual speech separation. Kai Li, Runxuan Yang, Fuchun Sun, Xiaolin Hu. ICML 2024. Vienna, Austria.

- Inspired by the cross-modal processing mechanism in the brain, we design intra- and inter-attention modules that integrate auditory and visual information for efficient speech separation. The model simulates audio-visual fusion at different levels of the sensory cortical areas as well as in higher association areas such as the parietal cortex.

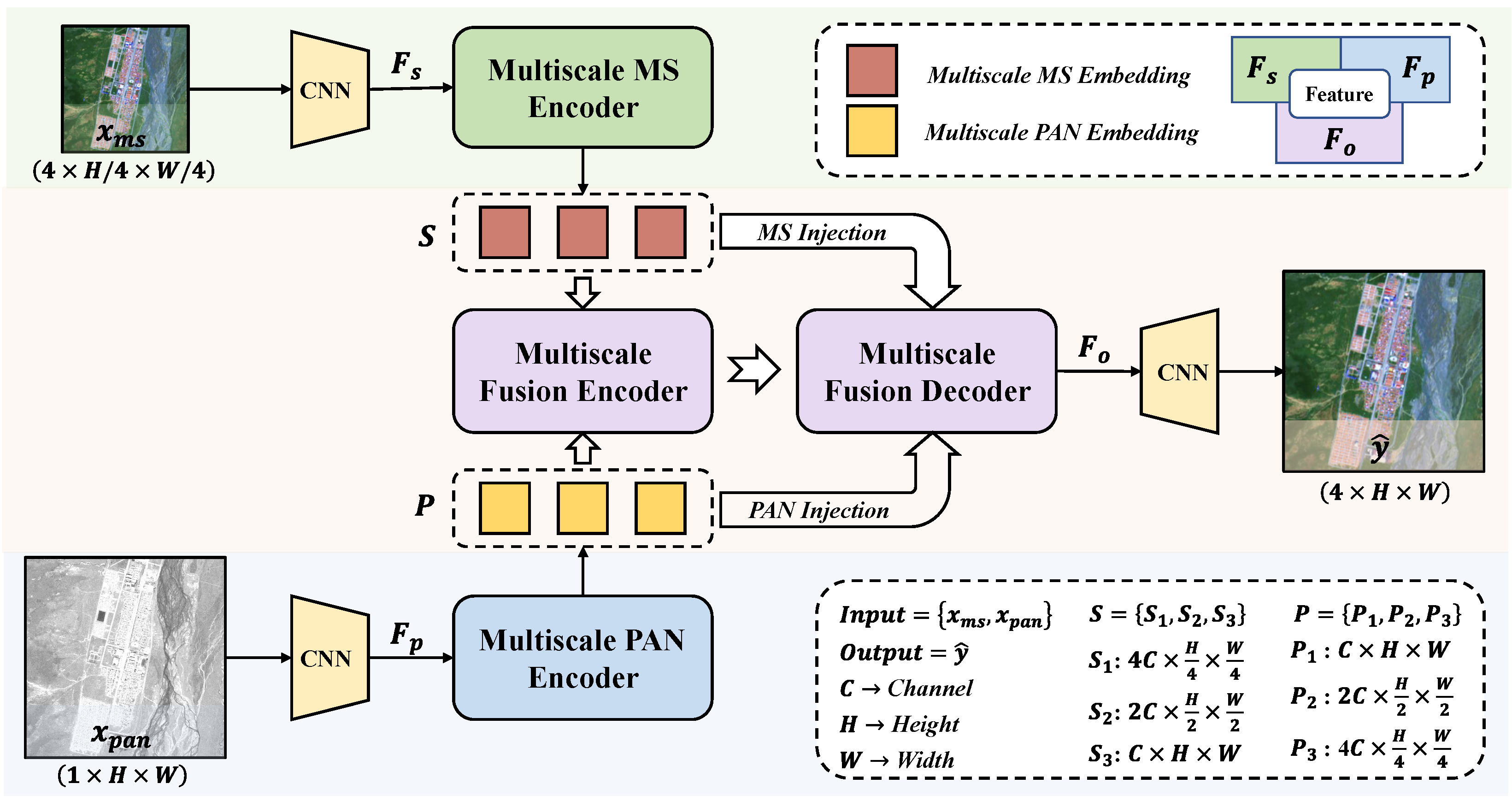

Towards Robust Pansharpening: A Large-Scale High-Resolution Multi-Scene Dataset and Novel Approach. Shiying Wang, Xuechao Zou, Kai Li, Junliang Xing, Tengfei Cao, Pin Tao. Remote Sensing 2024.

The sound demixing challenge 2023–Cinematic demixing track. Stefan Uhlich, Giorgio Fabbro, Masato Hirano, Shusuke Takahashi, Gordon Wichern, Jonathan Le Roux, Dipam Chakraborty, Sharada Mohanty, Kai Li, Yi Luo, Jianwei Yu, Rongzhi Gu, Roman Solovyev, Alexander Stempkovskiy, Tatiana Habruseva, Mikhail Sukhovei, Yuki Mitsufuji. ISMIR 2024.

High-Fidelity Lake Extraction via Two-Stage Prompt Enhancement: Establishing a Novel Baseline and Benchmark. Ben Chen, Xuechao Zou, Kai Li, Yu Zhang, Junliang Xing, Pin Tao. ICME 2024. Niagara Falls, Canada.

An Audio-Visual Speech Separation Model Inspired by Cortico-Thalamo-Cortical Circuits. Kai Li, Fenghua Xie, Hang Chen, Kexin Yuan, Xiaolin Hu. TPAMI 2024.

RTFS-Net: Recurrent time-frequency modelling for efficient audio-visual speech separation. Samuel Pegg*, Kai Li*, Xiaolin Hu. ICLR 2024. Vienna, Austria.

DiffCR: A Fast Conditional Diffusion Framework for Cloud Removal From Optical Satellite Images. Xuechao Zou*, Kai Li*, Junliang Xing, Yu Zhang, Shiying Wang, Lei Jin, Pin Tao. TGRS 2024.

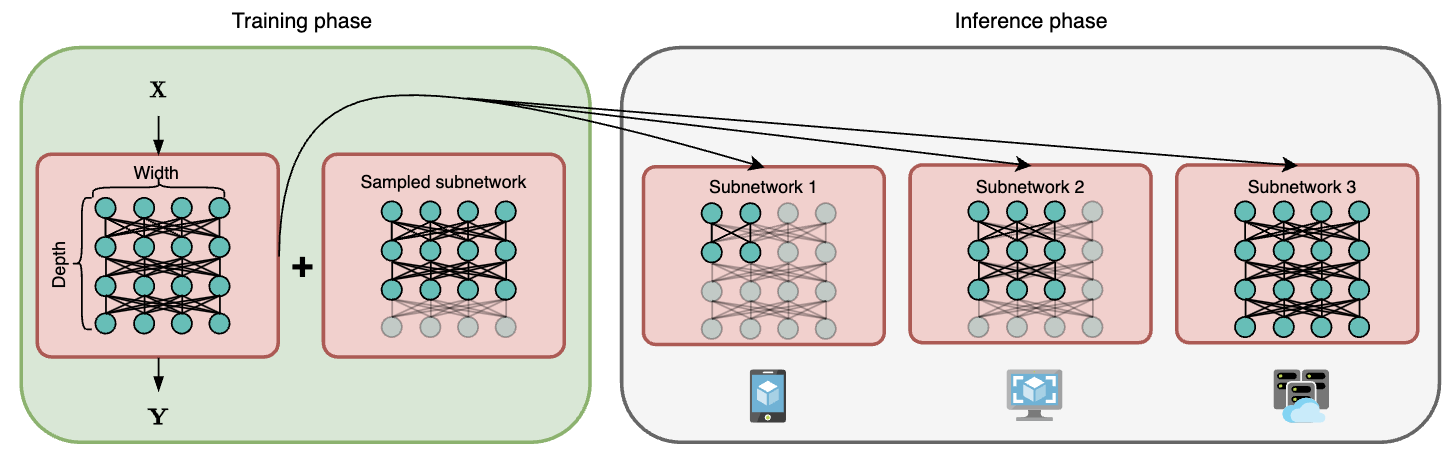

Subnetwork-to-go: Elastic Neural Network with Dynamic Training and Customizable Inference. Kai Li, Yi Luo. ICASSP 2024. Seoul, Korea.

- A method for training neural networks with dynamic depth and width configurations, enabling flexible extraction of subnetworks during inference without additional training.

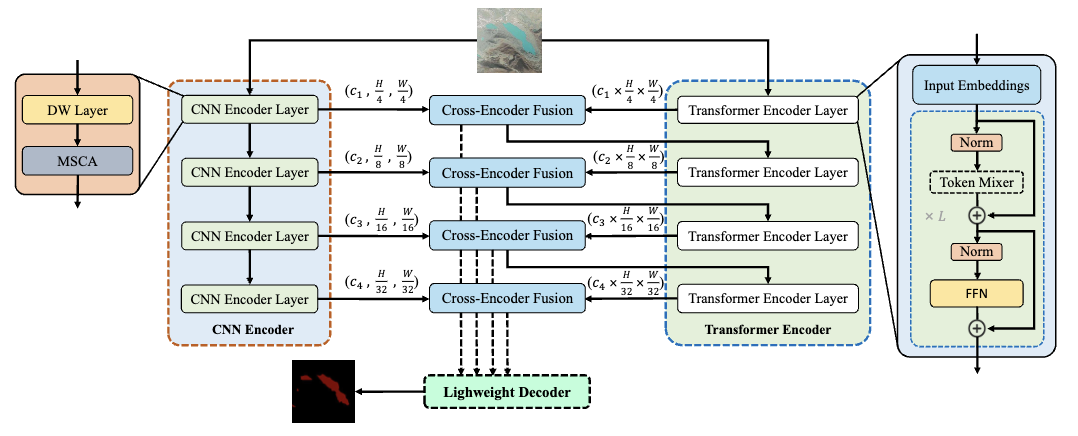

LEFormer: A Hybrid CNN-Transformer Architecture for Accurate Lake Extraction from Remote Sensing Imagery. Ben Chen, Xuechao Zou, Yu Zhang, Jiayu Li, Kai Li, Pin Tao. ICASSP 2024. Seoul, Korea.

2023

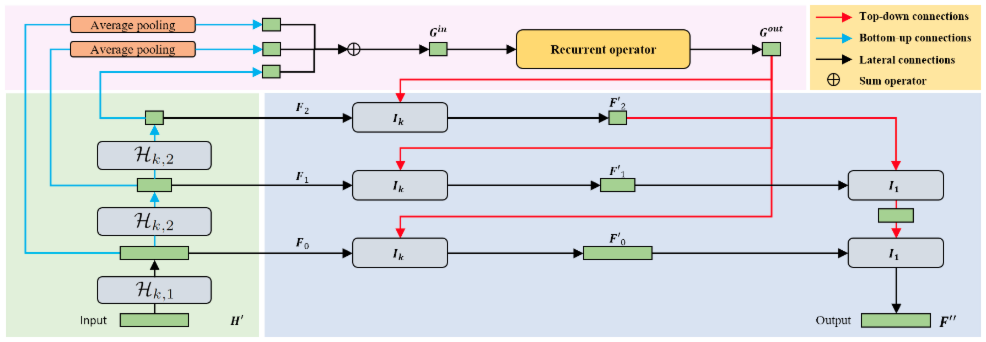

TDFNet: An Efficient Audio-Visual Speech Separation Model with Top-down Fusion. Samuel Pegg*, Kai Li*, Xiaolin Hu. ICIST 2023. Cairo, Egypt.

- TDFNet is an audio-visual speech separation method that introduces a multi-scale, multi-stage framework building on the strengths of TDANet and CTCNet. It is designed to address the inefficiency and limitations of existing multimodal speech separation models, particularly in real-time tasks.

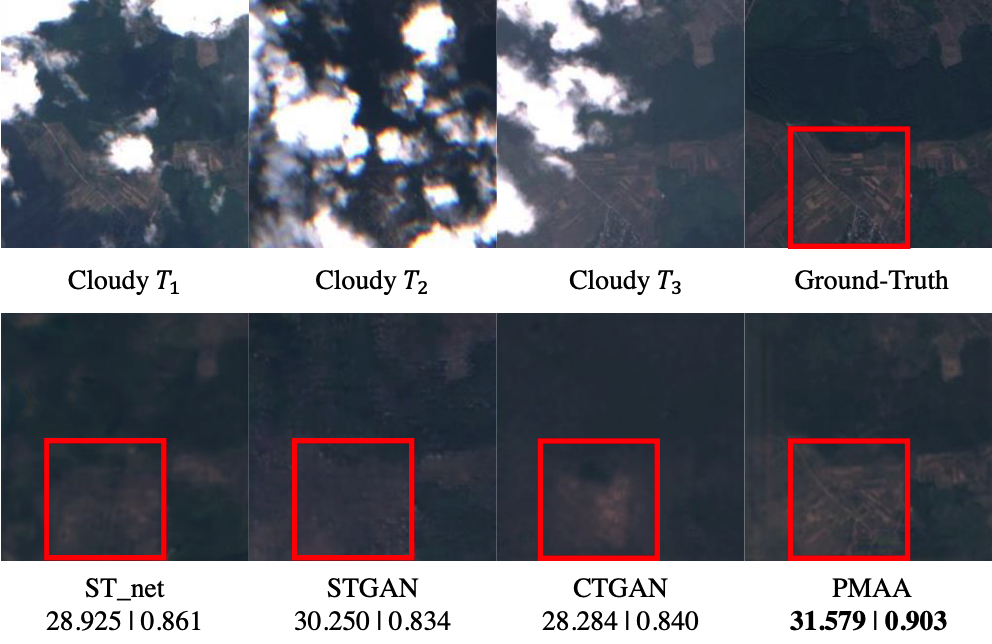

PMAA: A Progressive Multi-scale Attention Autoencoder Model for High-Performance Cloud Removal from Multi-temporal Satellite Imagery. Xuechao Zou*, Kai Li*, Junliang Xing, Pin Tao#, Yachao Cui. ECAI 2023. Kraków, Poland.

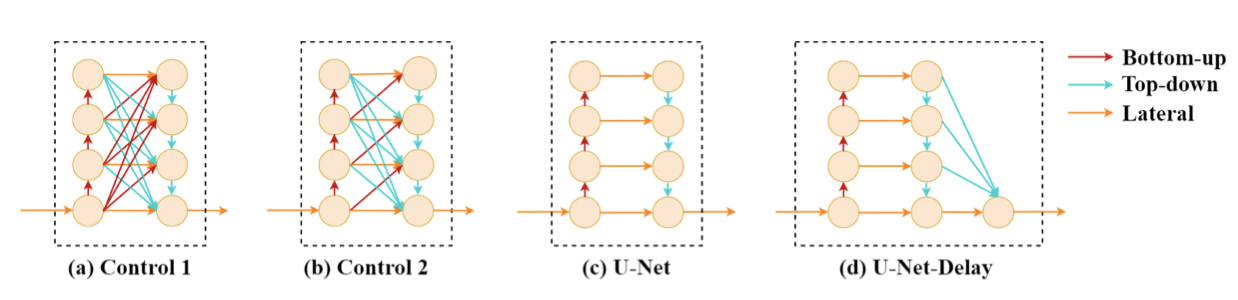

An efficient encoder-decoder architecture with top-down attention for speech separation. Kai Li, Runxuan Yang, Xiaolin Hu. ICLR 2023. Kigali, Rwanda.

One-page Report for Tencent AI Lab’s CDX 2023 System. Kai Li, Yi Luo. SDX Workshop 2023. Paris, France.

- We present the system of Tencent AI Lab for the Cinematic Sound Demixing Challenge 2023, which is based on a novel neural network architecture and a new training strategy.

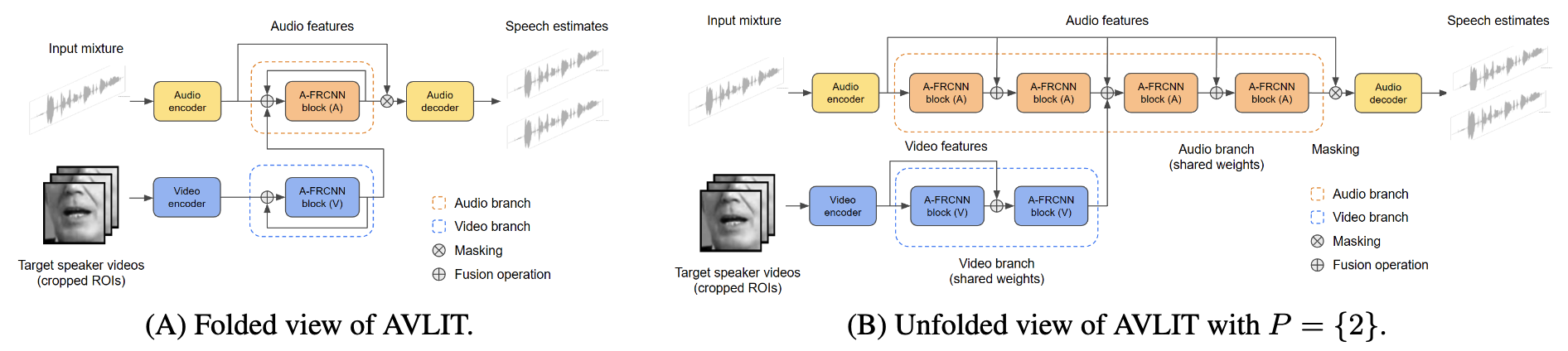

Audio-Visual Speech Separation in Noisy Environments with a Lightweight Iterative Model. Héctor Martel, Julius Richter, Kai Li, Xiaolin Hu and Timo Gerkmann. Interspeech 2023. Dublin, Ireland.

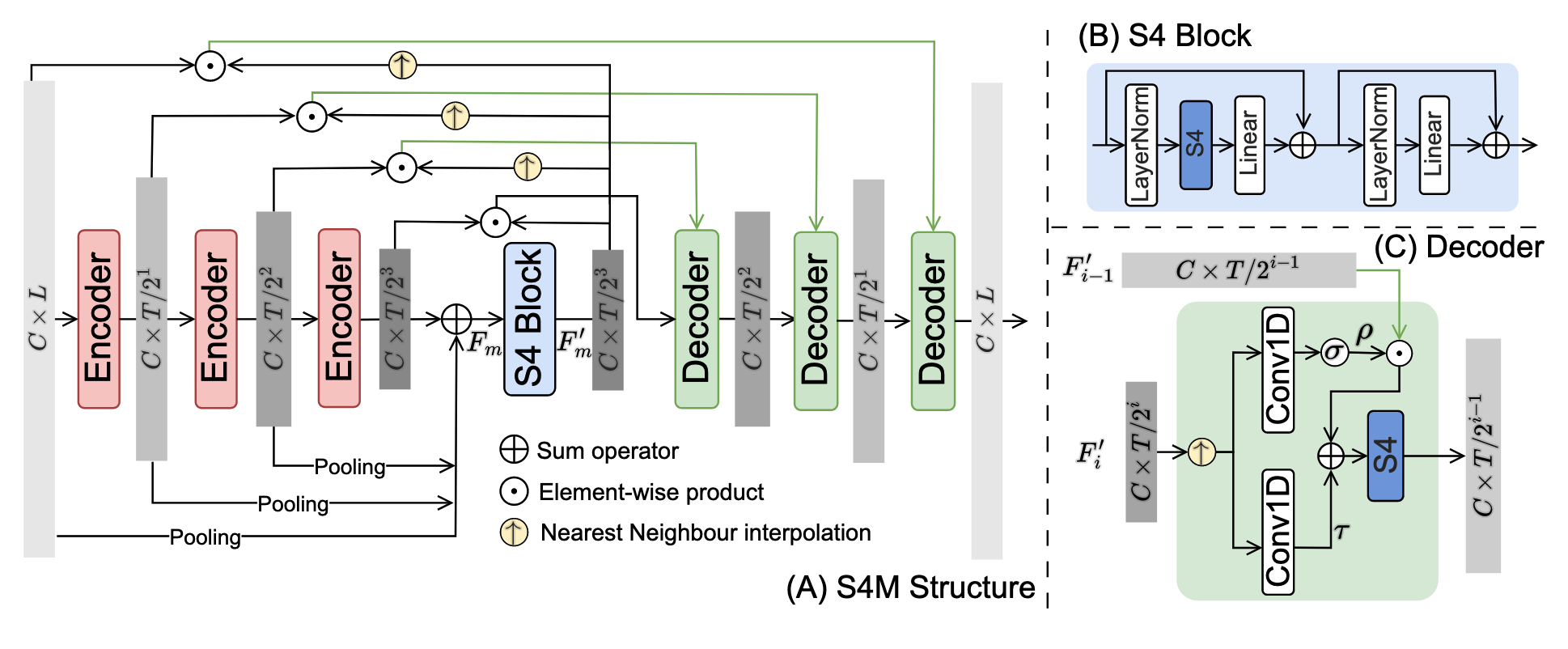

A Neural State-Space Model Approach to Efficient Speech Separation. Chen Chen, Chao-Han Huck Yang, Kai Li, Yuchen Hu, Pin-Jui Ku and Eng Siong Chng. Interspeech 2023. Dublin, Ireland.

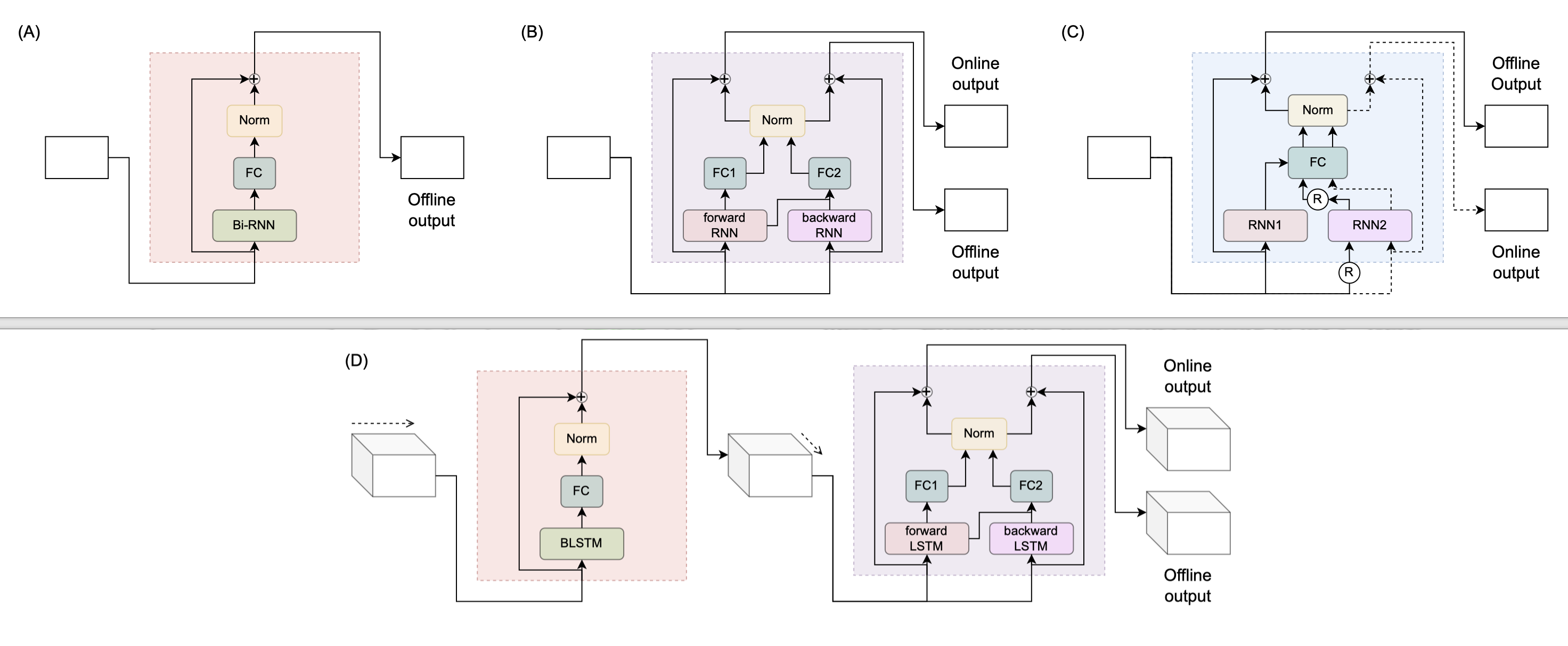

On the Design and Training Strategies for RNN-based Online Neural Speech Separation Systems. Kai Li, Yi Luo. ICASSP 2023. Melbourne, Australia.

- The paper explores converting RNN-based offline neural speech separation systems to online systems with minimal performance degradation.

2022

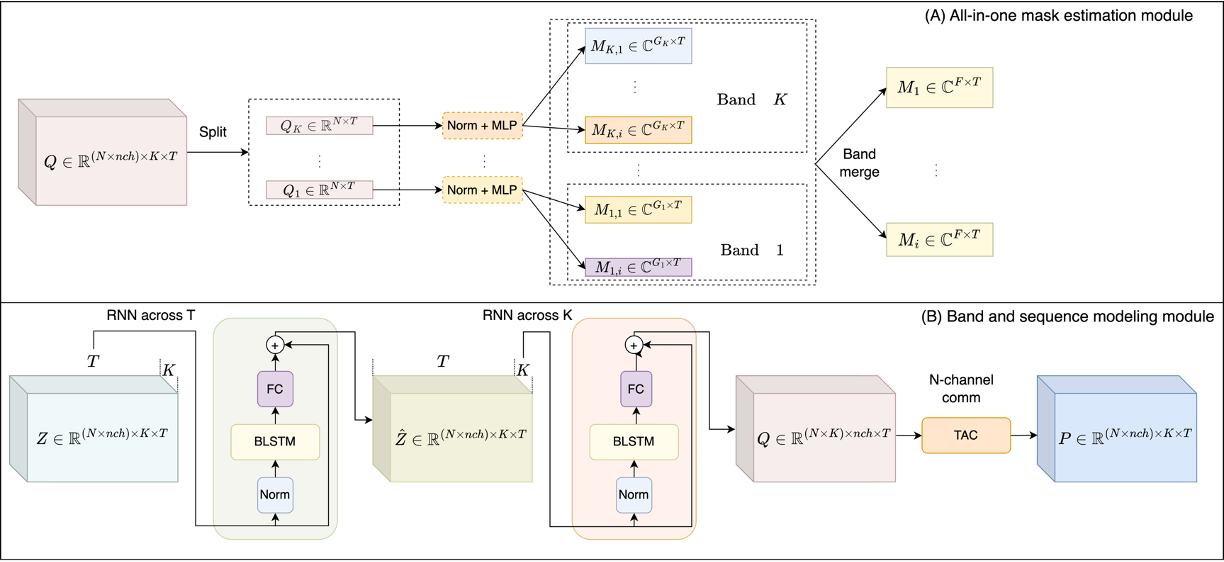

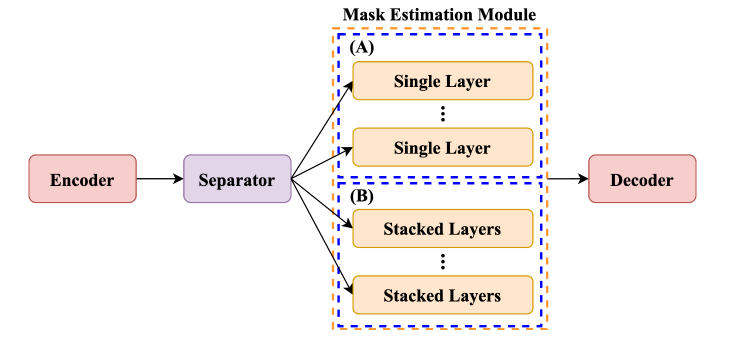

On the Use of Deep Mask Estimation Module for Neural Source Separation Systems. Kai Li, Xiaolin Hu, Yi Luo. Interspeech 2022. Incheon, Korea.

- We propose a Deep Mask Estimation Module for speech separation that improves performance without additional computational complexity.

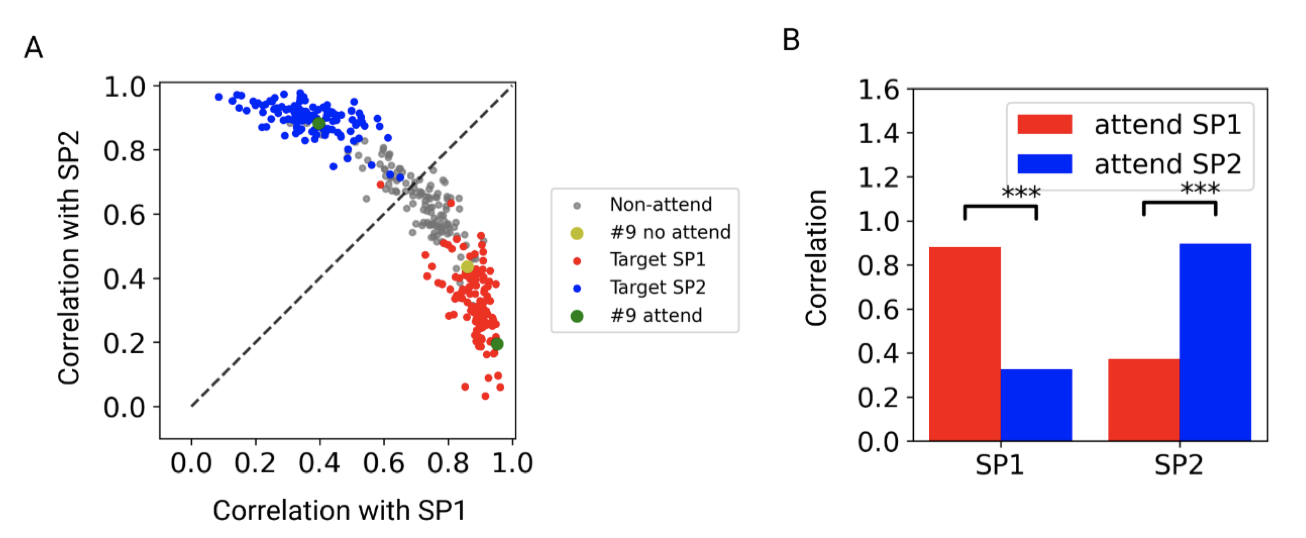

Inferring mechanisms of auditory attentional modulation with deep neural networks. Ting-Yu Kuo, Yuanda Liao, Kai Li, Bo Hong, Xiaolin Hu. Neural Computation, 2022.

2021

Speech Separation Using an Asynchronous Fully Recurrent Convolutional Neural Network. Xiaolin Hu*#, Kai Li*, Weiyi Zhang, Yi Luo, Jean-Marie Lemercier, Timo Gerkmann. NeurIPS 2021. Online.

2020 and Prior

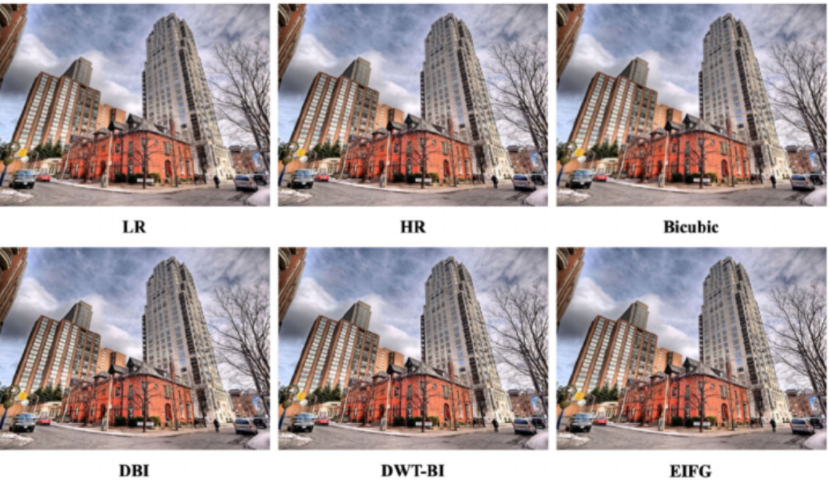

A Survey of Single Image Super Resolution Reconstruction. Kai Li, Shenghao Yang, Runting Dong, Jianqiang Huang#, Xiaoying Wang. IET Image Processing 2020.

- This paper provides a comprehensive survey of single image super-resolution reconstruction methods, including traditional methods and deep learning-based methods.

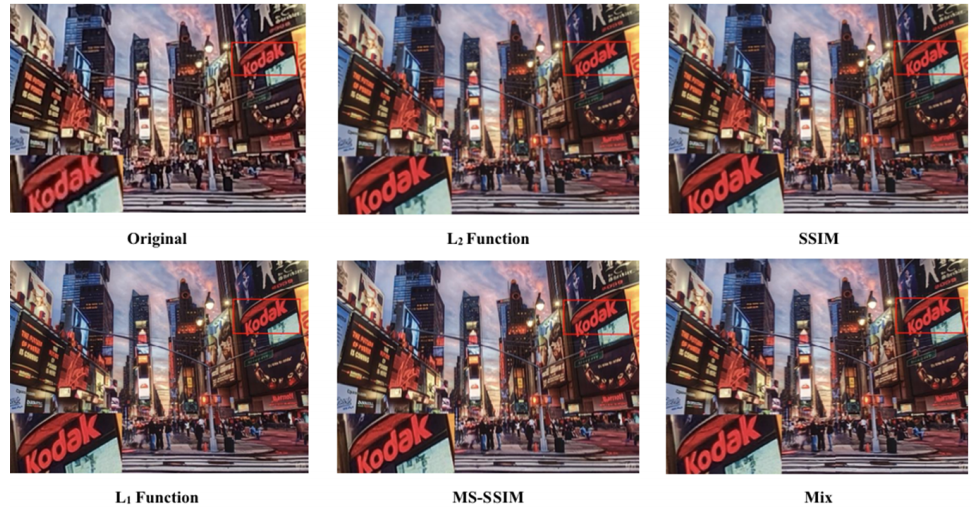

Single Image Super-resolution Reconstruction of Enhanced Loss Function with Multi-GPU Training. Jianqiang Huang*#, Kai Li*, Xiaoying Wang.

- This paper proposes a multi-GPU training method for single image super-resolution reconstruction, which can significantly reduce training time and improve performance.

🎖 Honors and Awards

- 2025.09 Third Prize in CCF Advanced Audio Technology Competition (Task 2)

- 2024.12 Outstanding Master’s Thesis Award from China Society of Image and Graphics

- 2024.08 Winner of the Best Student Presentation Award at NCMMSC 2024

- 2024.06 Outstanding Graduate of Beijing

- 2024.06 Excellent Master Thesis of Tsinghua University

- 2023.10 Xueye Scholarship

- 2023.10 Longhu Scholarship

- 2020.06 Outstanding Bachelor Thesis Award (Top 1%)

- 2020.06 Outstanding Graduate (Top 1%)

- 2019.11 National Scholarship (Top 1%)

📖 Education

- 2024.09 - now, Ph.D., Tsinghua University, Beijing.

- 2021.09 - 2024.06, Master's, Tsinghua University, Beijing.

- 2016.09 - 2020.06, Undergraduate, Department of Computer Technology and Application, Qinghai University, Xining.

- 2013.09 - 2016.06, Zhengzhou Fourth Middle School, Zhengzhou.

💻 Internships

- 2025.03 - 2025.08, ByteDance Seed AI, Beijing.

- 2022.10 - 2024.06, Tencent AI Lab, Beijing.

- 2021.07 - 2022.01, Tencent AI Lab, Beijing.

- 2020.09 - 2021.01, Moyincloud, Beijing.

🏁 Services

- Conference Reviewer: Interspeech 2023/2024, ICASSP 2023/2024/2025, NeurIPS 2023/2024, ECAI 2023, ICLR 2024/2025, AAAI 2025.

- Journal Reviewer: TASLP, TPAMI

🧑🏫 Teaching

- 2025 Fall, Head TA in Introduction to Deep Learning (00240332), instructed by Prof. Xiaolin Hu

- 2023 Fall, Head TA in Neurological and Cognitive Computing (80240642-0), instructed by Prof. Xiaolin Hu

- 2022 Fall, Head TA in Introduction to Deep Learning (00240332), instructed by Prof. Xiaolin Hu

© Kai Li | Last updated: November 5, 2025 | Theme by Yi Ren
